A guide to improving your site’s SEO: Part Two - Getting technical

As we discovered in Part One of this guide, Search Engine Optimisation (SEO) is a carefully planned balancing act which involves bringing together content creation, web development and digital marketing.

In this second section of our three-part guide, we aim to demystify some technical terms and provide practical tips that can be carried out on your website.

If you’re a developer, you will probably be familiar with some of these tips already.

If you are a website owner or digital marketer with an interest in the more technical aspects of running a website, don’t be scared! Many of these adjustments are simple to do if you are prepared to invest some time understanding how websites work.

1. Do you speak Robot?

Photo by Hello I'm Nik on Unsplash

All search engines use software called ‘robots’ which carry out automated visits to websites.

Also known as crawlers or spiders, these robots are designed to follow links and uncover every dark and dusty corner of the internet. They help search engines record and store the content they find in a database called a search index.

There’s a simple text file called robots.txt that can be placed in the root folder of a website, and it is aimed at the robots that visit the website.

No surprises there.

Its instructions can range from a plain and simple ‘disallow’ to more complex variations. It gives website owners some influence over which parts of their website robots crawl, and therefore which parts are likely to appear in search engine results.

Some examples of how robots.txt can be used:

- You want your website to appear in Google results, but not in Bing

- You want certain pages or areas of your website to be ignored by search engines (this can be useful where duplicate content might exist, for example in multiple categories)

- You want to prevent certain images within your website from being indexed

- You want to indicate to a robot where to find your website’s sitemap.xml

While this may sound complicated, a robots.txt file can be created in a plain text editor such as Notepad.

If you want to stop all robots from crawling a website (for example, if the website is in a beta or test environment), you could add the following to your file:

```
User-agent: *
Disallow: /
```

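The first line targets all robots (the asterisk matches any robot’s name).

The second line tells them not to visit any address that starts with /, which covers every page on the site, including the homepage. This effectively tells them to go away, for now.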
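If you’d like to check what a robots.txt file actually allows before publishing it, Python’s standard library includes a parser, `urllib.robotparser`. The sketch below feeds it the disallow-all example above, plus a hypothetical file (made-up domain and paths) covering some of the uses listed earlier. Bear in mind that this parser only does simple prefix matching on paths, whereas Google also supports extras such as `*` wildcards. Treat it as a sanity check, not a guarantee of how Google will read your file.

```python
from urllib.robotparser import RobotFileParser

# The "go away, for now" file from the example above.
STAGING_ROBOTS = """\
User-agent: *
Disallow: /
"""

# Hypothetical live-site file: block Bing entirely, hide a duplicate-content
# section and a private image folder from all other robots, and point robots
# at the sitemap. The domain and paths are made up for illustration.
LIVE_ROBOTS = """\
User-agent: Bingbot
Disallow: /

User-agent: *
Disallow: /duplicate-category/
Disallow: /images/private/

Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the named robot may fetch the URL under these rules."""
    return build_parser(robots_txt).can_fetch(user_agent, url)


if __name__ == "__main__":
    print(can_crawl(STAGING_ROBOTS, "Googlebot", "https://staging.example.com/"))
    print(can_crawl(LIVE_ROBOTS, "Bingbot", "https://www.example.com/about"))
    print(can_crawl(LIVE_ROBOTS, "Googlebot", "https://www.example.com/about"))
    print(can_crawl(LIVE_ROBOTS, "Googlebot", "https://www.example.com/duplicate-category/shoes"))
    print(build_parser(LIVE_ROBOTS).site_maps())
```

Here Googlebot is turned away from the staging site and from the duplicate category, but can still reach `/about` on the live site, while Bingbot is blocked everywhere.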

For more information on robots.txt, get it straight from the horse’s mouth.

2. Add some structure

As the name implies, structured data is a way to add detailed information about the contents and structure of a page or series of pages.

While it is not visible to human readers (unless you like reading HTML source code!), it is very useful because it helps search engines point us to the content we are looking for.

To a search engine robot, this extra data can provide context and meaning, and help search engines understand and record what pages are about and display them prominently when people search for those specific things.

Examples of the positive effect of structured data include Google’s enhanced result formats, such as rich or featured snippets, indented result sets and Knowledge Graph panels.

If your website contains any of the following types of information, you could consider using structured data to help boost the position of your pages.

- Articles

- Books

- Celebrities

- Courses

- Events

- Jobs

- Movies and music

- People

- Products of any type

- Recipes

These are just some common examples: there are hundreds of defined types and subtypes of structured data, covering almost any information you could think of.

Example of the way Google displays certain types of results based on structured data: in this case, a movie result for I, Robot.

In the future, structured data is likely to become increasingly important in helping us find exactly what we’re looking for among billions of pages.

There are a few different formats for structured data.

One of the most popular is JSON-LD, which is recommended by Google; Microdata and RDFa are also supported.
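To give a feel for what JSON-LD looks like, here is a rough Python sketch that generates the kind of `Movie` markup behind a result like the I, Robot example. The `movie_json_ld` helper and the image URL are hypothetical. The `@context`, `@type`, `name`, `director` and `datePublished` keys follow the schema.org vocabulary. In practice, a developer or CMS plugin would place the resulting `<script type="application/ld+json">` tag in the page’s HTML.

```python
import json


def movie_json_ld(name: str, director: str, year: str, image_url: str) -> str:
    """Return a JSON-LD script tag describing a film with schema.org's Movie type."""
    data = {
        "@context": "https://schema.org",
        "@type": "Movie",
        "name": name,
        "director": {"@type": "Person", "name": director},
        "datePublished": year,
        "image": image_url,
    }
    # Escape "</" so no value can close the script tag early;
    # "<\/" is still valid JSON and decodes back to "</".
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    print(movie_json_ld("I, Robot", "Alex Proyas", "2004",
                        "https://www.example.com/images/i-robot-poster.jpg"))
```

Because the markup lives in its own script tag, it can be added or changed without touching the visible page layout, which is one reason JSON-LD is often the easiest format to maintain.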

Adding structured data to your pages is a task probably best done by a web developer; however, some CMS products such as WordPress have plugins and extensions that can help with this via a visual editor.

For information about structuring your content, check out schema.org.

3. Sitemaps

You can often find two kinds of sitemap on a website.

One is a page that users can visit to see a visual representation of the website (usually just a bulleted list of links), and it is really only useful as a navigation tool for larger websites with lots of content. On many websites this kind of sitemap doesn’t add much value, especially if the navigation is the ‘megamenu’ type.

The second, possibly more useful type of sitemap is a data file which provides information about your website’s pages to robots rather than humans. This file is called sitemap.xml and the sensible way to create it is via your Content Management System (CMS). This way, whenever your content changes, your sitemap.xml will be automatically updated to reflect the changes.

By validating and submitting the address of your sitemap.xml to Google’s Search Console, you can provide some extra information about your pages to Google, such as a list of all the main pages of your website, when they were last updated and how often they are likely to change.
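Your CMS should normally generate this file for you. To show what it contains, here is a minimal Python sketch that builds sitemap.xml from a list of pages using the standard sitemaps.org format (`<loc>`, `<lastmod>`, `<changefreq>`). The `Page` class, `build_sitemap` function and example URLs are hypothetical stand-ins for whatever your CMS stores.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET
from dataclasses import dataclass
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    url: str               # absolute URL of the page
    last_modified: date    # written out as <lastmod>
    change_frequency: str  # e.g. "daily", "weekly" or "monthly", written as <changefreq>


def build_sitemap(pages: list[Page]) -> str:
    """Return sitemap.xml content for the given pages."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = page.url  # "&" etc. are escaped automatically
        ET.SubElement(entry, "lastmod").text = page.last_modified.isoformat()
        ET.SubElement(entry, "changefreq").text = page.change_frequency
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        Page("https://www.example.com/", date(2024, 1, 15), "weekly"),
        Page("https://www.example.com/about", date(2023, 11, 2), "monthly"),
    ]))
```

Generating the file from the same data that drives your pages, rather than editing it by hand, is what keeps it in step with your content.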

You can also advise Google about images, videos, languages and other media within a sitemap.

While neither type of sitemap is essential for most websites, they are not very time-consuming to create and can help you understand how Google ‘sees’ your site, especially if it’s a brand new website that is being indexed for the first time.

4. Google search operators

If you work in digital, it’s worth spending some time familiarising yourself with some of the lesser-known Google search operators.

It’s one of the easiest research techniques, and best of all, it’s one you don’t have to be a techie to learn.

Search operators are simply special terms, symbols or phrases that you enter into a Google search to get more specific or extended sets of results back.

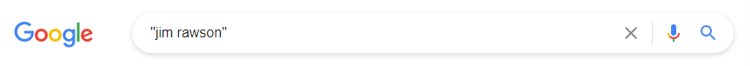

A basic operator search example could be:

By placing a phrase inside inverted commas, users can tell google they want results with a specific phrase. Without commas, the search could return more pages with the words appearing separately.

But search operators get more interesting when you use advanced syntax.

These operators allow you to:

- search for results with and without specific phrases or words

- search for pages that contain phrases in specific parts of the page like the title, links or body of the article

- search for pages where specific words appear near each other

- search a specific website for the occurrence of specific phrases

- and much more

This means you can use operators for research tasks such as finding plagiarised content, tracking mentions in blogs and news, checking keyword occurrence and researching synonyms.
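If you run the same kinds of searches regularly, it can help to build the queries programmatically. The Python sketch below composes query strings from a few of the operators described above and turns them into a search URL. The helper names (`phrase`, `build_query`, `near`, `search_url`) are hypothetical. Quotation marks, `site:`, `intitle:` and the minus sign are long-standing Google operators, while `AROUND(n)` is unofficial and undocumented, so Google may change or drop it.

```python
from __future__ import annotations

from urllib.parse import urlencode


def phrase(text: str) -> str:
    """Wrap text in quotation marks so Google looks for that exact phrase."""
    return f'"{text}"'


def build_query(*terms: str, exclude: tuple[str, ...] = (),
                site: str | None = None, intitle: str | None = None) -> str:
    """Join search terms and optional operators into one query string."""
    parts = list(terms)
    if intitle:
        parts.append(f"intitle:{intitle}")  # must appear in the page title
    if site:
        parts.append(f"site:{site}")  # only search within this website
    parts.extend(f"-{word}" for word in exclude)  # leave out pages with these words
    return " ".join(parts)


def near(first: str, second: str, max_words: int) -> str:
    """Proximity search using AROUND(n), an unofficial Google operator."""
    return f"{first} AROUND({max_words}) {second}"


def search_url(query: str) -> str:
    """Turn a query into a Google search URL (spaces become '+', quotes '%22')."""
    return "https://www.google.com/search?" + urlencode({"q": query})


if __name__ == "__main__":
    print(build_query(phrase("technical seo"), site="example.com", exclude=("jobs",)))
    # "technical seo" site:example.com -jobs
    print(near("design", phrase("Jim Rawson"), 20))
    # design AROUND(20) "Jim Rawson"
    print(search_url(phrase("technical seo")))
    # https://www.google.com/search?q=%22technical+seo%22
```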

Confused?

Let’s have another example.

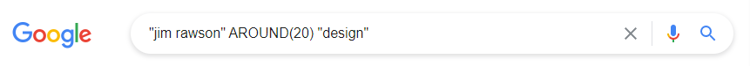

I’m responsible for – and interested in – design. So I’d like to know if I’m mentioned in this context.

So, using operators, I can put together a very specific search which to Google, means: ‘show me pages where design is mentioned within 20 words of Jim Rawson.’

It looks like this.

The results tell me that I still have a bit of work to do to raise my online profile.

There are many other useful and flexible search operators – you can find a full list, and more examples, here.

5. Performance

Is your website slow and heavy, or lightweight and fast?

Elephant Photo by Wolfgang Hasselmann. Cheetah photo by Cara Fuller, both on Unsplash

The performance of a website has a bigger influence than ever before on the position of its pages in Google search results.

Why?

Google wants website owners to create sites that load quickly on mobile devices, and that means keeping pages as small and efficient as possible.

The following considerations can all help you achieve a better performing website or web app, and it’s likely you’ll need to work with a web developer to get the best outcome, unless you have developer skills yourself.

Compression & minification

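By compressing and optimising certain parts of your website code and assets, such as images, you can reduce the overall size of pages. Minification strips unnecessary characters such as whitespace and comments out of code files, while compression (for example gzip or Brotli, applied by the web server) shrinks files further as they are sent to the browser. There are a number of specialised tools and techniques to help do this.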
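To get a feel for how much compression can save, this Python sketch gzips a block of repetitive HTML and compares the sizes. It only illustrates the idea, and the sample markup and `gzip_savings` function are made up. On a real site, the web server or CDN usually compresses responses on the fly.

```python
from __future__ import annotations

import gzip


def gzip_savings(content: str) -> tuple[int, int]:
    """Return (original bytes, gzip-compressed bytes) for a piece of page content."""
    raw = content.encode("utf-8")
    compressed = gzip.compress(raw, compresslevel=9)
    return len(raw), len(compressed)


if __name__ == "__main__":
    # Hypothetical product-list markup: real pages repeat similar patterns,
    # which is exactly what gzip takes advantage of.
    html = "".join(
        f'<li class="product-card"><a href="/products/{i}">Product {i}</a></li>\n'
        for i in range(200)
    )
    before, after = gzip_savings(html)
    print(f"{before} bytes -> {after} bytes ({100 * after / before:.0f}% of original)")
```

Text-based files such as HTML, CSS and JavaScript usually compress well. Formats like JPEG and PNG are already compressed, which is why image savings come from choosing better formats and sizes instead.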

Google’s own PageSpeed Insights and Lighthouse tools can help assess how well your page assets are compressed and minified.

According to Google’s web.dev platform, it’s also worth considering next-gen image formats such as WebP and JPEG XR, which offer better compression than older standards such as JPEG and PNG.

Reduce render-blocking scripts

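Most websites use scripts written in JavaScript to power certain functions. However, a script loaded in the page’s head section without the async or defer attribute stops the browser from displaying the page until the script has downloaded and run. Care should therefore be taken not to include too many scripts that slow down the initial loading of the page.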
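A quick way to spot likely culprits is to look for external scripts in the page’s head section that have neither `async` nor `defer`. The Python sketch below does this with the standard-library `html.parser`. It is a rough heuristic, assuming only external scripts in the head matter (it ignores inline scripts and scripts in the body), and the class and function names are hypothetical. Lighthouse performs a far more thorough audit.

```python
from __future__ import annotations

from html.parser import HTMLParser


class BlockingScriptFinder(HTMLParser):
    """Collects external scripts inside <head> that have neither async nor defer."""

    def __init__(self) -> None:
        super().__init__()
        self.in_head = False
        self.blocking: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "head":
            self.in_head = True
        elif tag == "script" and self.in_head:
            attributes = dict(attrs)  # boolean attributes such as defer map to None
            src = attributes.get("src")
            deferred = "async" in attributes or "defer" in attributes
            is_module = attributes.get("type") == "module"  # modules defer by default
            if src and not deferred and not is_module:
                self.blocking.append(src)

    def handle_endtag(self, tag):
        if tag == "head":
            self.in_head = False


def find_blocking_scripts(html: str) -> list[str]:
    """Return the src of each likely render-blocking script, in page order."""
    finder = BlockingScriptFinder()
    finder.feed(html)
    finder.close()
    return finder.blocking


if __name__ == "__main__":
    page = """<html><head>
    <script src="/js/analytics.js"></script>
    <script src="/js/menu.js" defer></script>
    </head><body></body></html>"""
    print(find_blocking_scripts(page))  # ['/js/analytics.js']
```

Adding `defer` (or `async`, for scripts that don’t depend on the page or on each other) to a flagged tag lets the browser keep building the page while the script downloads.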

Browser caching

Caching is something that your web browser does so that it doesn’t have to fully reload all web content each time it visits a website. Commonly, a browser will store certain images and other files the first time it visits a page, which helps speed up future browsing. Your web server controls how long the browser may keep each file by sending HTTP headers such as Cache-Control.
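As an illustration of how a server might choose those headers, here is a small Python sketch of a caching policy. The function name, the list of file types and the one-year lifetime are assumptions, not a rule. The `max-age`, `immutable` and `no-cache` directives are standard parts of the HTTP Cache-Control header.

```python
from pathlib import PurePosixPath
from urllib.parse import urlsplit

# File types that usually change only when the site is redeployed (assumed list).
STATIC_ASSET_TYPES = {".css", ".js", ".png", ".jpg", ".jpeg", ".webp", ".svg", ".woff2"}

ONE_YEAR_SECONDS = 60 * 60 * 24 * 365  # 31536000


def cache_control_for(request_path: str) -> str:
    """Choose a Cache-Control header value for a request path (hypothetical policy)."""
    path = urlsplit(request_path).path  # ignore any ?query string
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in STATIC_ASSET_TYPES:
        # Only safe if file names change whenever their content does,
        # e.g. app.3f9a1c.css, so browsers never keep a stale copy.
        return f"public, max-age={ONE_YEAR_SECONDS}, immutable"
    # Pages: the browser may store them but must check with the server before reuse.
    return "no-cache"


if __name__ == "__main__":
    for path in ["/static/app.3f9a1c.css", "/images/logo.png", "/about"]:
        print(path, "->", cache_control_for(path))
```

Long lifetimes for versioned assets mean repeat visitors download almost nothing but the page itself, while `no-cache` on pages keeps content fresh.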

Server response time

Server response times depend on many contributing factors, ranging from the hosting infrastructure itself and the platform running on it to its configuration and the databases it hosts. High-performing servers should keep the ‘Time to First Byte’ (TTFB) low. TTFB is the time between the browser requesting a page and receiving the first byte of the server’s response.

Again, Google Lighthouse is a useful tool to establish your page’s current TTFB.
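For a quick check from your own machine, TTFB can also be approximated with a short Python script. This sketch times how long it takes to open a connection, send a GET request and receive the response’s status line and headers, so DNS lookup and connection setup are included. The `measure_ttfb` function and the local demo server are hypothetical. A single measurement from one location is only a rough indication compared with Lighthouse.

```python
import http.client
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from threading import Thread
from urllib.parse import urlsplit


def measure_ttfb(url: str, timeout: float = 10.0) -> float:
    """Approximate TTFB in seconds for a single GET request."""
    parts = urlsplit(url)
    connection_class = (http.client.HTTPSConnection if parts.scheme == "https"
                        else http.client.HTTPConnection)
    conn = connection_class(parts.hostname, parts.port, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        start = time.perf_counter()
        conn.request("GET", path)      # opens the connection, then sends the request
        response = conn.getresponse()  # returns once the status line and headers arrive
        elapsed = time.perf_counter() - start
        response.read()                # drain the body before closing
        return elapsed
    finally:
        conn.close()


class DemoHandler(BaseHTTPRequestHandler):
    """Hypothetical local page that 'thinks' for about 50 ms before replying."""

    def do_GET(self):
        time.sleep(0.05)
        body = b"<h1>Hello</h1>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


if __name__ == "__main__":
    server = ThreadingHTTPServer(("127.0.0.1", 0), DemoHandler)  # port 0 = any free port
    Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = f"http://127.0.0.1:{server.server_address[1]}/"
        print(f"TTFB: {measure_ttfb(url) * 1000:.0f} ms")
    finally:
        server.shutdown()
        server.server_close()
```

To check a real page, call `measure_ttfb` with your own address and repeat it a few times, since individual timings vary.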

Content Distribution Networks (CDN)

CDNs can help website performance by storing and distributing copies of your content in numerous global locations, helping your website perform as well in Tokyo as it does in London.

Google has also come up with a mobile-optimised page format called AMP (Accelerated Mobile Pages). AMP pages are automatically stored in the Google AMP Cache, which acts as a CDN, and can be a good way to publish news and blog content.


AMP pages are denoted by a small lightning symbol in Google results on mobile devices.

However, the jury is out on the longevity of AMP pages.

Conclusion - Part Two

The technical aspects of running a website or digital channel can be overwhelming for many.

However, armed with a little knowledge, a bit of help from a dev (although maybe not this one) and a clear idea of what you want to achieve, increasing the technical performance and quality of your website is within reach of most.

And remember - not everything has to be done in one go. Improving your digital services is a process and can be done as time, budget and resources permit.

Stay tuned for Part 3 of this series which focuses on digital and content marketing.